Les Bell and Associates Pty Ltd

Blog entries about Les Bell and Associates Pty Ltd

NAS vendor QNAP has released fixes for two remote command execution vulnerabilities in its QTS, QuTS hero and QuTScloud operating systems. The vulnerabilities are:

- CVE-2023-23368 (CVSS 3.1 score: 9.8), a command injection vulnerability affecting:

  - QTS 5.0.x (fixed in QTS 5.0.1.2376 build 20230421 and later)

  - QTS 4.5.x (fixed in QTS 4.5.4.2374 build 20230416 and later)

  - QuTS hero h5.0.x (fixed in QuTS hero h5.0.1.2376 build 20230421 and later)

  - QuTS hero h4.5.x (fixed in QuTS hero h4.5.4.2374 build 20230417 and later)

  - QuTScloud c5.0.x (fixed in QuTScloud c5.0.1.2374 and later)

- CVE-2023-23369 (CVSS 3.1 score: 9.8), a command injection vulnerability affecting:

  - QTS 5.1.x (fixed in QTS 5.1.0.2399 build 20230515 and later)

  - QTS 4.3.6 (fixed in QTS 4.3.6.2441 build 20230621 and later)

  - QTS 4.3.4 (fixed in QTS 4.3.4.2451 build 20230621 and later)

  - QTS 4.3.3 (fixed in QTS 4.3.3.2420 build 20230621 and later)

  - QTS 4.2.x (fixed in QTS 4.2.6 build 20230621 and later)

  - Multimedia Console 2.1.x (fixed in Multimedia Console 2.1.2 (2023/05/04) and later)

  - Multimedia Console 1.4.x (fixed in Multimedia Console 1.4.8 (2023/05/05) and later)

  - Media Streaming add-on 500.1.x (fixed in Media Streaming add-on 500.1.1.2 (2023/06/12) and later)

  - Media Streaming add-on 500.0.x (fixed in Media Streaming add-on 500.0.0.11 (2023/06/16) and later)
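For admins who track firmware levels in an inventory, the "fixed in X and later" entries above lend themselves to a simple comparison. The following is only a minimal sketch: the function names `parse_firmware` and `is_patched` are hypothetical, and it assumes version strings follow the "5.0.1.2376 build 20230421" pattern used in the advisories (with an optional leading "h" or "c"). The caller is assumed to compare against the fix for the same release branch, since a newer branch is not automatically patched.

```python
import re
from typing import Tuple


def parse_firmware(text: str) -> Tuple[Tuple[int, ...], int]:
    """Split e.g. '5.0.1.2376 build 20230421' into ((5, 0, 1, 2376), 20230421).

    A missing build date is treated as 0 (an assumption for this sketch).
    """
    match = re.fullmatch(
        r"\s*[hc]?(\d+(?:\.\d+)*)(?:\s+build\s+(\d{8}))?\s*", text
    )
    if match is None:
        raise ValueError(f"unrecognised firmware string: {text!r}")
    version = tuple(int(part) for part in match.group(1).split("."))
    build = int(match.group(2)) if match.group(2) else 0
    return version, build


def is_patched(installed: str, fixed: str) -> bool:
    """True if 'installed' is at or beyond 'fixed' within the same branch."""
    return parse_firmware(installed) >= parse_firmware(fixed)
```

For example, `is_patched("4.2.6 build 20221028", "4.2.6 build 20230621")` is false because the version numbers match but the installed build date is older; the installed value here is made up for illustration.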

These are all fairly old versions of the software, as revealed by the version numbers of the fixed updates, and so most well-maintained installations will be unaffected - and those that are affected should obviously update immediately.

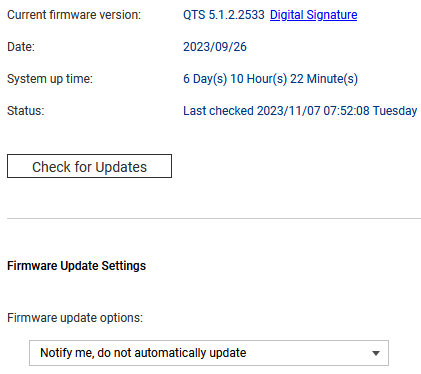

After all, the beauty of NAS appliances such as those from QNAP and Synology is that admins don't need to get down in the weeds with Linux command-line administration - but they do still have to follow good admin practices such as proactive patching. On the QNAP devices, this is as simple as logging in with an admin account, opening the Control Panel, selecting Firmware Update and clicking the "Check for Updates" button, which will kick off the update process (right). And it's not even necessary to log in regularly - the server can notify you or even automatically install critical updates. It's also possible to do this from your phone, via the Qmanager app.

Really, people, there are no excuses . . .

QNAP Inc., Vulnerability in QTS, QuTS hero, and QuTScloud, security advisory QSA-23-31, 4 November 2023. Available online at https://www.qnap.com/en-uk/security-advisory/qsa-23-31.

QNAP Inc., Vulnerability in QTS, Multimedia Console, and Media Streaming add-on, security advisory QSA-23-35, 4 November 2023. Available online at https://www.qnap.com/en-uk/security-advisory/qsa-23-35.

Upcoming Courses

- SE221 CISSP Fast Track Review, Virtual/Online, 13 - 17 November 2023

- SE221 CISSP Fast Track Review, Sydney, 4 - 8 December 2023

- SE221 CISSP Fast Track Review, Sydney, 11 - 15 March 2024

- SE221 CISSP Fast Track Review, Virtual/Online, 13 - 17 May 2024

- SE221 CISSP Fast Track Review, Virtual/Online, 17 - 21 June 2024

- SE221 CISSP Fast Track Review, Sydney, 22 - 26 July 2024

About this Blog

I produce this blog while updating the course notes for various courses. Links within a story mostly lead to further details in those course notes, and will only be accessible if you are enrolled in the corresponding course. This is a shallow ploy to encourage ongoing study by our students. However, each item ends with a link to the original source.

These blog posts are collected at https://www.lesbell.com.au/blog/index.php?user=3. If you would prefer an RSS feed for your reader, the feed can be found at https://www.lesbell.com.au/rss/file.php/1/dd977d83ae51998b0b79799c822ac0a1/blog/user/3/rss.xml.

Copyright to linked articles is held by their individual authors or publishers. Our commentary is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License and is labeled TLP:CLEAR.

Trend Micro's Zero Day Initiative has disclosed four new 0-day vulnerabilities in Microsoft's Exchange server. The vulnerabilities are:

- ZDI-23-1578 - Microsoft Exchange ChainedSerializationBinder Deserialization of Untrusted Data Remote Code Execution Vulnerability (CVSS 3.0 score: 7.5)

- ZDI-23-1579 - Microsoft Exchange DownloadDataFromUri Server-Side Request Forgery Information Disclosure Vulnerability (CVSS 3.0 score: 7.1)

- ZDI-23-1580 - Microsoft Exchange DownloadDataFromOfficeMarketPlace Server-Side Request Forgery Information Disclosure Vulnerability (CVSS 3.0 score: 7.1)

- ZDI-23-1581 - Microsoft Exchange CreateAttachmentFromUri Server-Side Request Forgery Information Disclosure Vulnerability (CVSS 3.0 score: 7.1)
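Three of these are server-side request forgery (SSRF) issues, in which a server can be induced to fetch a URI of the attacker's choosing. As a general illustration of the usual defence (and not a description of how Exchange works or how Microsoft might fix it), here is a minimal sketch of an allow-list check applied before any server-side fetch. The function name `is_fetch_allowed` and the host names are hypothetical.

```python
import ipaddress
from urllib.parse import urlsplit


def is_fetch_allowed(url: str, allowed_hosts: set) -> bool:
    """Permit a server-side fetch only for HTTPS URLs on an explicit allow-list."""
    try:
        parts = urlsplit(url)
    except ValueError:
        return False  # malformed URL, e.g. a broken IPv6 literal
    if parts.scheme != "https":
        return False
    host = parts.hostname  # already lower-cased; strips any user:pass@ prefix
    if host is None:
        return False
    try:
        ipaddress.ip_address(host)
        return False  # raw IP literals (e.g. metadata services) are never allowed
    except ValueError:
        pass  # not an IP literal, so fall through to the name check
    return host in allowed_hosts
```

The design choice here is to allow only named, known-good destinations rather than trying to enumerate every bad one, since block-lists of internal addresses are easy to bypass.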

The most serious of these is obviously ZDI-23-1578, which is described like this:

"This vulnerability allows remote attackers to execute arbitrary code on affected installations of Microsoft Exchange. Authentication is required to exploit this vulnerability.

"The specific flaw exists within the ChainedSerializationBinder class. The issue results from the lack of proper validation of user-supplied data, which can result in deserialization of untrusted data. An attacker can leverage this vulnerability to execute code in the context of SYSTEM."
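Deserialization of untrusted data is a general class of flaw, not something peculiar to .NET. As an analogy only, the sketch below uses Python's `pickle` module and the allow-list technique described in the Python documentation: the deserializer refuses to resolve any type that is not explicitly permitted. The names `RestrictedUnpickler`, `restricted_loads` and the contents of `SAFE_BUILTINS` are illustrative choices, and nothing here reflects the actual Exchange code.

```python
import builtins
import io
import pickle

# Hypothetical allow-list: the only globals a serialized stream may reference.
SAFE_BUILTINS = {"range", "complex", "set", "frozenset", "slice"}


class RestrictedUnpickler(pickle.Unpickler):
    """Unpickler that resolves only explicitly allow-listed builtins."""

    def find_class(self, module, name):
        if module == "builtins" and name in SAFE_BUILTINS:
            return getattr(builtins, name)
        # Everything else is rejected before it can be instantiated or called.
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data: bytes):
    """Drop-in analogue of pickle.loads() that enforces the allow-list."""
    return RestrictedUnpickler(io.BytesIO(data)).load()
```

With this in place, `restricted_loads(pickle.dumps(range(3)))` succeeds, while a stream that references any other callable raises `pickle.UnpicklingError`. Even so, the safest course is not to deserialize untrusted input at all.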

Trend Micro reported the vulnerability to Microsoft on 7 September; however, Microsoft replied on 27 September:

"The vendor states that the vulnerability does not require immediate servicing."

The company responded to the other vulnerabilities in the same way. I'm really not so sure about that. I'd be willing to bet that a number of threat actors are sitting on Exchange user credentials which they have acquired via phishing, infostealers or other exploits, and they could make use of these credentials to authenticate and then run an exploit based on one or more of these vulnerabilities. After all, the vulnerable classes and methods are identified right there, in the advisories. And if a threat actor doesn't have the necessary credentials, this gives them motivation to go phishing.

(Photo by Pascal Müller on Unsplash)

As the ZDI reports make clear, "Given the nature of the vulnerability, the only salient mitigation strategy is to restrict interaction with the application." I'd also be making my Exchange users a bit more aware of the possibilities of phishing attacks, and watching Exchange servers like a hawk.

Bazydlo, Piotr, ZDI Published Advisories, advisories list, 2 November 2023. Available online at https://www.zerodayinitiative.com/advisories/published/.

(Photo by Tayla Kohler on Unsplash)

"Trojan Horse" is one of many cybersecurity terms that are overloaded with multiple meanings ("sandbox" is another). In the early security literature, a trojan horse was a pair of programs which could bypass the rules of a multi-level security system, sneaking information from a high security level (like TOPSECRET) to a low security level (like UNCLASSIFIED) which would allow it to be removed from the system and its secure environment.

Later, the term was reused for other purposes - one of them being a program that looks like it does one thing, but really does another. The stereotypical example is a program that emulates the login process on a UNIX system, but actually captures the user's credentials. Wait till the victim goes to lunch, having failed to log out, then run the program on their terminal session, and when they return from lunch, they'll assume they logged out, will log in again and voila! You've captured their password.

These days, the range of trojans is much greater. Many masquerade as mobile device apps, occasionally making it past static checks and into official app stores. Others pose as unlocked or free versions of popular programs - even open source programs that are free to download, but should only be obtained from their official sites (Googling for software downloads is dangerous as threat actors will pay advertising fees to get their trojans inserted at the top of the results).

The additional functions of these trojans vary as well: some are infostealers, some are droppers, some are backdoors. Malware analysts routinely examine trojan code to figure out what it does, using static analysis, disassembly or running it in a sandbox to safely observe its behaviour.

And so it was with a malware sample which was first detected back in 2017, which analysts had classified as a Monero cryptocurrency miner. This kind of thing does not pose a major threat - mainly, it's stealing CPU cycles and eventually the user will notice, figure out what's going on, kill it and remove it. No big deal, and nobody took much notice.

The years passed, until Kaspersky researchers unexpectedly detected a signature within the WININT.EXE process of a malware sample associated with the Equation group. As they looked deeper, they traced back through previous examples, right back to this original suspicious code of 2017. A detailed analysis then revealed something completely unexpected: the cryptocurrency miner was just one component of something much larger.

They discovered that the malware used the EternalBlue SMBv1 exploit, which was first disclosed in April 2017 when the ShadowBrokers group tried to auction what they claimed was a library of exploits stolen from the NSA. But it was much more sophisticated than other malware that used the same exploit - much stealthier, and much more complex.

In fact, this malware family, which the Kaspersky researchers dubbed StripedFly, can propagate within a network using not only EternalBlue, but also the SSH protocol. Infection starts with injection of shellcode which can download binary files from bitbucket[.]org and execute PowerShell scripts. It then injects additional shellcode, deploying a payload consisting of an expandable framework with plugin functionality, including an extremely lightweight Tor network client.

The malware achieves persistence in various ways; if it can run PowerShell scripts with admin privileges, it will create task scheduler entries, but if running with user privileges, it inserts an obfuscated registry entry. Either way, it stores the body of the malware in another base64-encoded registry key. If it cannot run PowerShell scripts - for example, if it infected the system via the Cygwin SSH server - then it creates a hidden file with a randomized name in the %APPDATA% directory.

The download repository on Bitbucket was created in 2018, and contains updated versions of the malware. The main C2 server, however, is on the Tor network; the malware connects to it at regular intervals, sending beacon messages. The various modules which extend the malware register callbacks, which are triggered on initial connection to the C2 server, or when a C2 message is received - an architectural hallmark of APT malware.

The modules can be used to upgrade or uninstall the malware, capture user credentials (including wifi network names and passwords, SSH, FTP and WebDav credentials), take screenshots, execute commands, record microphone input, gather specific files, enumerate system information and - of course - mine Monero cryptocurrency.

In short, this is a very sophisticated piece of malware, and not the simple cryptominer it was originally believed to be. Somehow it has flown under the radar for many years, remaining largely undetected. Just who is behind it, and what their objectives may be, is far from clear.

The Kaspersky report provides a detailed analysis and IOCs, along with an amusing side-note concerning a related piece of malware called ThunderCrypt.

Belov, Sergey, Vilen Kamalov and Sergey Lozhkin, StripedFly: Perennially flying under the radar, blog post, 26 October 2023. Available online at https://securelist.com/stripedfly-perennially-flying-under-the-radar/110903/.

New Blog Format

Regular readers will notice the changed format of this blog; rather than aggregating several stories in one post, we have switched to posting individual stories with a more informative title. As before, links within a story mostly lead to further details in the course notes of some of our courses, and will only be accessible if you are enrolled in the corresponding course - this is a shallow ploy to encourage ongoing study. However, each item ends with a link to the original source.

Welcome to today's daily briefing on security news relevant to our CISSP (and other) courses. Links within stories may lead to further details in the course notes of some of our courses, and will only be accessible if you are enrolled in the corresponding course - this is a shallow ploy to encourage ongoing study. However, each item ends with a link to the original source.

Site Maintenance

This site will be offline intermittently over the weekend of Saturday 4 and Sunday 5 November as we migrate to a new server. Normal, stable service will resume at 8 am AEDT (Sydney time) on Monday 6 November.

News Stories

FIRST Publishes CVSS v4.0

FIRST - the Forum of Incident Response and Security Teams - has published version 4.0 of the Common Vulnerability Scoring System (CVSS). A draft of the standard was previewed back in June at the FIRST Conference in Montreal, and this was followed by two months of public comments and two months of work to address the feedback, culminating in the final publication.

CVSS is a key standard used for calculation and exchange of severity information about vulnerabilities, and is an essential component of vulnerability management systems, particularly the prioritization of remediation efforts. Says FIRST:

"The revised standard offers finer granularity in base metrics for consumers, removes downstream scoring ambiguity, simplifies threat metrics, and enhances the effectiveness of assessing environment-specific security requirements as well as compensating controls. In addition, several supplemental metrics for vulnerability assessment have been added including Automatable (wormable), Recovery (resilience), Value Density, Vulnerability Response Effort and Provider Urgency. A key enhancement to CVSS v4.0 is also the additional applicability to OT/ICS/IoT, with Safety metrics and values added to both the Supplemental and Environmental metric groups."

A key part of the update is new nomenclature which will enhance the usability of CVSS in automated threat intelligence, particularly emphasizing that CVSS is not just the base score, which is the most widely quoted. The new nomenclature distinguishes:

- CVSS-B: CVSS Base Score

- CVSS-BT: CVSS Base + Threat Score

- CVSS-BE: CVSS Base + Environmental Score

- CVSS-BTE: CVSS Base + Threat + Environmental Score
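The naming scheme above is mechanical enough to sketch in a few lines. This is only an illustration of how a tool might label a score according to which metric groups contributed to it; the function name `cvss_nomenclature` and its boolean parameters are hypothetical and are not part of the FIRST specification.

```python
def cvss_nomenclature(threat: bool = False, environmental: bool = False) -> str:
    """Return the CVSS v4.0 label for a score built from the given metric groups.

    The Base group is always present; Threat and Environmental are optional.
    """
    label = "CVSS-B"
    if threat:
        label += "T"
    if environmental:
        label += "E"
    return label
```

So a score that a defender has enriched with both exploit-maturity information and local environmental requirements would be reported as `cvss_nomenclature(threat=True, environmental=True)`, i.e. "CVSS-BTE".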

The base score provides information about the severity of the vulnerability itself. But in order to assess the risk posed by potential exploitation, it is necessary to take into account information specific to the defender's environment, such as the existence of compensating controls, not to mention the value of exposed assets and likely impact of exploitation. Some of these are expressed in the Environmental Metric group, which encompasses the CIA security requirements of the vulnerable system along with modifications to the Base Metrics as appropriate for the environment. Similarly, it is up to the user to use information about the maturity and availability of exploits and the skill level of likely threat actors in deriving a Threat Score. I expect some risk management professionals are already busy mapping these to elements of the FAIR ontology, such as Threat Capability and Resistance Strength.

Additional Supplemental Metrics can now be used to convey additional extrinsic attributes of a vulnerability, such as safety impacts, lack of automatability and the effectiveness of recovery controls, that do not affect the final CVSS-BTE score.

CVSS 4.0 is extensively documented, and FIRST has even developed an online training course, which can be found at https://learn.first.org/.

Uncredited, FIRST has officially published the latest version of the Common Vulnerability Scoring System (CVSS v4.0), press release, 1 November 2023. Available online at https://www.first.org/newsroom/releases/20231101.

Dugal, Dave and Rich Dale (chairs), Common Vulnerability Scoring System Version 4.0, web documentation page, 1 November 2023. Available online at https://www.first.org/cvss/v4-0/index.html.

CVSS v4.0 Calculator: https://www.first.org/cvss/calculator/4.0.

Still More AI Hallucination Embarrassment

Regular readers might remember the scandal that erupted earlier this year when it was revealed that Big 4 consultancy PwC had been providing its clients with inside information about tax reforms which had been acquired in its role of advisor to the Australian Taxation Office. As we commented at the time, this would have been an egregious failure of the Brewer-Nash security model, better known as the Chinese Wall model, were it not for the fact that there was obviously no implementation of it in the first place.

The outrage surrounding this prompted a parliamentary enquiry into the ethics and professional accountability of the big audit and consulting firms more generally, with all the Big Four coming under sustained criticism for their practices. Now that enquiry has given us yet another example of how the uncritical use of large language models can give rise to major embarrassment.

One of the public submissions to the parliamentary enquiry was prepared by a group of accounting academics who ripped into several of the Big Four, accusing KPMG of being complicit in a "KPMG 7-Eleven wage theft scandal" culminating in the resignation of several partners and claiming the firm audited the Commonwealth Bank during a financial planning scandal. Their submission also claimed that Deloitte was being sued by the liquidators of collapsed construction firm Probuild for failing to audit the firm's accounts properly, and also accused Deloitte of falsifying the accounts of a company called Patisserie Valerie.

Here's the problem: KPMG was not involved in 7-Eleven's scandal and never audited the Commonwealth Bank, while Deloitte never audited either Probuild or Patisserie Valerie.

So why did these academics accuse the firms? You guessed it: part of their submission was generated by an AI large language model, specifically Google's Bard, which the authors had used to create several case studies about misconduct.

The result was certainly plausible - the inquiry was, after all, instituted in response to similar, real cases - but the case studies it created were the result of classic LLM "hallucination", and the academics have now been forced to apologise and withdraw their work, submitting a new version.

It's going to be hard for their university to enforce their policy on academic integrity when even emeritus professors don't do their homework properly. We grade this paper as an "F" - failure to properly cite sources; possible plagiarism?

Belot, Henry, Australian academics apologise for false AI-generated allegations against big four consultancy firms, The Guardian, 2 November 2023. Available online at https://www.theguardian.com/business/2023/nov/02/australian-academics-apologise-for-false-ai-generated-allegations-against-big-four-consultancy-firms.

These news brief blog articles are collected at https://www.lesbell.com.au/blog/index.php?courseid=1. If you would prefer an RSS feed for your reader, the feed can be found at https://www.lesbell.com.au/rss/file.php/1/dd977d83ae51998b0b79799c822ac0a1/blog/user/3/rss.xml.

News Stories

Updated Guidance on Cisco IOS XE Vulnerabilities

In mid-October we reported on a 0-day vulnerability, CVE-2023-20198, which was being exploited in the wild. This vulnerability was in the web UI of Cisco's IOS XE operating system, and sported a CVSS 3.1 score of 10.0, which is guaranteed to get the attention of network admins. More recently, this vuln was joined by CVE-2023-20273, an input sanitization failure which allows an authenticated attacker to perform remote command execution with root privileges via crafted input. The CVSS score this time is only 7.2, so it's not quite as bad, but still . . .
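To see why the two scores differ so much, it helps to work through the CVSS v3.1 base score arithmetic. The sketch below implements the base equations and weights from the v3.1 specification; the function names are my own, and the vector strings in the usage note are assumptions chosen to reproduce the quoted scores, so check the advisories for the authoritative vectors.

```python
import math

# Metric weights from the CVSS v3.1 specification.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}


def roundup(value: float) -> float:
    """Round up to one decimal place, as defined in the v3.1 specification."""
    scaled = int(round(value * 100000))
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score(vector: str) -> float:
    """Compute the base score from a vector such as 'AV:N/AC:L/PR:N/...'."""
    metrics = dict(item.split(":") for item in vector.split("/"))
    changed = metrics["S"] == "C"
    privileges = (PR_CHANGED if changed else PR_UNCHANGED)[metrics["PR"]]

    # Impact sub-score: combines the confidentiality, integrity, availability losses.
    iss = 1 - (1 - CIA[metrics["C"]]) * (1 - CIA[metrics["I"]]) * (1 - CIA[metrics["A"]])
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss

    exploitability = 8.22 * AV[metrics["AV"]] * AC[metrics["AC"]] * privileges * UI[metrics["UI"]]

    if impact <= 0:
        return 0.0
    total = impact + exploitability
    if changed:
        total *= 1.08
    return roundup(min(total, 10))
```

Under the assumed vectors, `base_score("AV:N/AC:L/PR:N/UI:N/S:C/C:H/I:H/A:H")` gives 10.0, while requiring high privileges and leaving scope unchanged, as in `base_score("AV:N/AC:L/PR:H/UI:N/S:U/C:H/I:H/A:H")`, gives 7.2. The whole difference comes from the Privileges Required and Scope metrics.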

Now Cisco has updated its advisory on these, recommending that admins check whether the web server is running with the command

show running-config | include ip http server|secure|active

If the output contains either of

ip http server

or

ip http secure-server

then the web UI is enabled. However, the presence of either ip http active-session-modules none or ip http secure-active-session-modules none indicates that the vulnerabilities are not exploitable via either HTTP or HTTPS, respectively.
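For anyone checking more than a handful of devices, the logic above is easy to automate once the command output has been collected. This is a minimal sketch that only interprets text already captured from the show command; how the output is gathered (SSH, a config backup and so on) is left out, and the function name `assess_web_ui` and the result keys are hypothetical.

```python
def assess_web_ui(show_output: str) -> dict:
    """Interpret 'show running-config | include ip http server|secure|active' output."""
    # Exact-match on whole lines so that 'no ip http server' is not mistaken
    # for 'ip http server'.
    lines = {line.strip() for line in show_output.splitlines() if line.strip()}

    http_enabled = "ip http server" in lines
    https_enabled = "ip http secure-server" in lines
    http_blocked = "ip http active-session-modules none" in lines
    https_blocked = "ip http secure-active-session-modules none" in lines

    return {
        "web_ui_enabled": http_enabled or https_enabled,
        "exploitable_over_http": http_enabled and not http_blocked,
        "exploitable_over_https": https_enabled and not https_blocked,
    }
```

A device whose output contains only "ip http secure-server" would be reported as having the web UI enabled and exploitable over HTTPS, which is the case that most needs attention.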

Cisco's security advisory suggests a number of mitigations, principally disabling the web server or at least restricting access to a trusted network only, but the real fix is to download and install the appropriate software updates.

Cisco, Cisco IOS XE Software Web UI Privilege Escalation Vulnerability, security advisory, 1 November 2023. Available online at https://sec.cloudapps.cisco.com/security/center/content/CiscoSecurityAdvisory/cisco-sa-iosxe-webui-privesc-j22SaA4z.

US and Allies Up the Ante Against Ransomware

Via Reuters come reports that the White House is finalizing a new policy on government responses to ransomware attacks, and will share information on ransomware groups, including the accounts they use for ransom collection. The policy was announced during this week's International Counter Ransomware Initiative. Two information-sharing platforms will be created, one by Lithuania and the other jointly by Israel and the United Arab Emirates. These will share a blacklist, managed by the US Department of Treasury, of cryptocurrency wallets being used to transfer ransomware payments.

The massive revenues collected by ransomware operators have been used to fund the development of new exploits and malware, creating a feedback loop that further increases their profitability. As a result, in recent years, ransomware has been the number one concern of CISOs globally. Security professionals generally agree that the only way to break this loop is to simply refuse to pay any ransoms, a decision that must weigh heavily on organizations that necessarily process personal - especially sensitive personal - information. When such organizations refuse to pay an extortion demand from an operator which has exfiltrated personal information, the extortionists will often turn their focus to the individual victims, as happened last week to the parents of children in the Clark County (Las Vegas) school district.

One option that is perennially raised is that governments should make ransomware payments illegal, a somewhat drastic step that would lift the moral burden of refusing to pay from the affected organizations - but may well transfer the harm to the individual victims who usually have absolutely no responsibility for a breach. At the International Counter Ransomware Initiative meeting, forty countries pledged never to pay a ransom to cybercriminals, although this seems to apply only to the governments themselves.

Clearly, the best option by far is to never fall victim to a ransomware attack in the first place - something that is obviously much more easily said than done.

Hunnicutt, Trevor and Zeba Siddiqui, White House to share ransomware data with allies -source, Reuters, 31 October 2023. Available online at https://www.reuters.com/technology/white-house-share-ransomware-data-with-allies-source-2023-10-30/.

Siddiqui, Zeba, Alliance of 40 countries to vow not to pay ransom to cybercriminals, US says, Reuters, 1 November 2023. Available online at https://www.reuters.com/technology/alliance-40-countries-vow-not-pay-ransom-cybercriminals-us-says-2023-10-31/.

Wootton-Greener, Julie, Some CCSD parents get suspicious email with information about their kids, Las Vegas Review-Journal, 26 October 2023. Available online at https://www.reviewjournal.com/local/education/some-ccsd-parents-get-suspicious-email-with-information-about-their-kids-2928929/.

Arid Viper Spreads Android Malware Disguised as Dating App

Just a couple of days ago we reported on the activities of the Hamas-aligned hacktivist group, Arid Viper. Cisco Talos now reports that the group has been running an 18-month campaign which targets Arabic-speaking Android users with malicious .apk (Android package) files.

Curiously, the malware is very similar to an apparently legitimate online dating application referred to as "Skipped", e.g. "Skipped - Chat, Match & Dating", specifically using a similar name and the same shared project on the app's development platform. This suggests that Arid Viper is actually behind the dating app, or somehow gained unauthorized access to its development platform. However, Skipped is only one of many linked fake dating apps for both Android and iOS, including VIVIO, Meeted and Joostly, suggesting that Arid Viper may make use of these apps in future campaigns. Arid Viper is known to have used similar "honey trap" tactics on Android, iOS and Windows in the past.

Under the covers, the malware uses Google's Firebase messaging platform as a C2 channel, and has the ability to gather system information, exfiltrate credentials, record, send and receive calls and text messages, record contacts and call history, exfiltrate files, switch to a new C2 domain and also to download and execute additional trojanized apps.

Cisco Talos, Arid Viper disguising mobile spyware as updates for non-malicious Android applications, technical report, 31 October 2023. Available online at https://blog.talosintelligence.com/arid-viper-mobile-spyware/.

Surge in Office Add-ins Used as Droppers, Reports HP

In their Threat Insights Report for Q3 of 2023, HP Wolf Security reports a surge in the use of Excel add-in (.xlam) malware, which has risen from the 46th place in malware delivery file types in Q2 to 7th place in Q3. In many campaigns, the threat actors use an Excel add-in as a dropper, often carrying the Parallax RAT as a payload. The victims were lured into opening malicious email attachments containing Excel add-ins claiming to be scanned invoices, generally sent from compromised email accounts.

When the victim opens the attachment, it runs the Excel xlAutoOpen() function, which in turn makes use of various system libraries as a LOLbin strategy, making it harder for static analysis to flag the add-in as malware. The malware starts two threads: one creates an executable file called lum.exe and runs it, while the other creates a decoy invoice called Invoice.xlsx and opens it to distract the user. Meanwhile, lum.exe unpacks itself in memory and then uses process hollowing to take over another process. It also creates a copy of lum.exe in the AppData Startup folder to achieve persistence.

The malware itself is Parallax RAT, which can remotely control the infected PC, steal login credentials, upload and download files, etc. It requires no C2 infrastructure or support, which makes it easy for novice cybercriminals, and can be rented for only $US65 per month via the hacking forums where it is advertised.

Other tactics observed by HP's researchers include using malicious macro-enabled PowerPoint add-ins (.ppam) to spread XWorm malware and a campaign in which a threat actor lured novice cybercriminals into installing fake RATs, thereby getting them to infect themselves with malware. Honestly, is there no honour among thieves any more?
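Since few users have any legitimate reason to receive Office add-ins by email, one cheap control is to flag attachments with the add-in extensions mentioned in the report. This is only a sketch of the idea, assuming attachment file names have already been extracted by a mail gateway or script; the function name `flag_addin_attachments` is hypothetical, and a file extension is of course a weak signal that attackers can change.

```python
from pathlib import PurePath
from typing import Iterable, List

# Add-in file types named in the HP report: Excel (.xlam) and PowerPoint (.ppam).
ADDIN_EXTENSIONS = {".xlam", ".ppam"}


def flag_addin_attachments(filenames: Iterable[str]) -> List[str]:
    """Return the attachment names whose extension marks them as Office add-ins."""
    return [
        name
        for name in filenames
        if PurePath(name).suffix.lower() in ADDIN_EXTENSIONS
    ]
```

For instance, given the names "Invoice.XLAM", "report.xlsx" and "deck.ppam", the function returns the first and third, which could then be quarantined for closer inspection.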

HP Wolf Security, Threat Insights Report, Q3 - 2023, technical report, October 2023. Available online at https://threatresearch.ext.hp.com/hp-wolf-security-threat-insights-report-q3-2023/.

Upcoming Courses

- SE221 CISSP Fast Track Review, Virtual/Online, 13 - 17 November 2023

- SE221 CISSP Fast Track Review, Sydney, 4 - 8 December 2023

- SE221 CISSP Fast Track Review, Sydney, 11 - 15 March 2024

- SE221 CISSP Fast Track Review, Virtual/Online, 13 - 17 May 2024

- SE221 CISSP Fast Track Review, Virtual/Online, 17 - 21 June 2024

- SE221 CISSP Fast Track Review, Sydney, 22 - 26 July 2024

These news brief blog articles are collected at https://www.lesbell.com.au/blog/index.php?courseid=1. If you would prefer an RSS feed for your reader, the feed can be found at https://www.lesbell.com.au/rss/file.php/1/dd977d83ae51998b0b79799c822ac0a1/blog/user/3/rss.xml.

Copyright to linked articles is held by their individual authors or publishers. Our commentary is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License and is labeled TLP:CLEAR.

Welcome to today's daily briefing on security news relevant to our CISSP (and other) courses. Links within stories may lead to further details in the course notes of some of our courses, and will only be accessible if you are enrolled in the corresponding course - this is a shallow ploy to encourage ongoing study. However, each item ends with a link to the original source.

News Stories

SEC Charges SolarWinds and its CISO

The US Securities and Exchange Commission has announced charges against Texas network management software firm SolarWinds and its Chief Information Security Officer, Timothy G. Brown, following the legendary Sunburst attack on the firm and the customers using its Orion software. The SEC alleges that SolarWinds misled investors by disclosing only generic, hypothetical risks when in fact Brown, and the company management, knew of specific shortcomings in the company's controls, as well as the elevated level of risk the company faced at the time.

The complaint alleges that, for example, 'a 2018 presentation prepared by a company engineer and shared internally, including with Brown, that SolarWinds’ remote access set-up was “not very secure” and that someone exploiting the vulnerability “can basically do whatever without us detecting it until it’s too late,” which could lead to “major reputation and financial loss” for SolarWinds.'

Similarly, the SEC alleges that in 2018 and 2019 presentations, Brown stated that 'the “current state of security leaves us in a very vulnerable state for our critical assets” and that “[a]ccess and privilege to critical systems/data is inappropriate.”'

The SEC claims that Brown was aware of the vulnerabilities and risks but failed to adequately address them or, in some cases, raise them further within the company.

There's a lot more in the SEC's press release and doubtless in the court filings.

There's a lesson in this for CISOs everywhere. We have long recommended the involvement of both security personnel - who can assess the strength of controls and likelihood of vulnerability exploitation - and the relevant information asset owners - who can assess loss magnitude or impact - in both the evaluation of risk and, very importantly, the selection of controls which will mitigate risk to a level acceptable to the information asset owner. What is an acceptable level of risk is a business decision, not a security one, and it needs to be balanced against opportunities which lie firmly on the business side of the risk taxonomy - so it is one that the information asset owner has to make.

What this suit makes clear is that fines and judgements are an increasingly significant component of breach impact. The result should be a new clarity of thought about cyber risk management and an increased willingness of management to engage in the process. With this increased impact should come an increased willingness to fund controls.

All this gives a bit more leverage for security professionals to get the job done properly. But as an added incentive for honesty and clarity all round, I'd suggest capturing all risk acceptance decisions formally - if not on paper, then at least with an email trail. You never know when this could prove useful.

SEC, SEC Charges SolarWinds and Chief Information Security Officer with Fraud, Internal Control Failures, press release, 30 October 2023. Available online at https://www.sec.gov/news/press-release/2023-227.

New Wiper Targets Israeli Servers

As expected, the conflict in the Middle East continues to spill over into cyberspace, with a likely pro-Hamas hacktivist group now distributing malware which targets Linux systems in Israel.

Security Joes Incident Response Team, who volunteered to perform incident response forensics for Israeli companies, have discovered a new wiper targeting Linux systems in Israel. Dubbed BiBi-Linux because the string "Bibi" (a nickname referring to Israeli PM Netanyahu) is hardcoded in both the binary and the renamed files it overwrites, the program superficially looks like ransomware but makes no attempt to exfiltrate data to a C2 server, does not leave a ransom note, and does not use a reversible encryption algorithm. Instead, it simply overwrites every file with random data, renaming it with a random name and an extension that starts with "Bibi".

The software is designed for maximum efficiency, written in C/C++ and compiled to a 64-bit ELF executable, and it makes use of multithreading to overwrite as many files as quickly as possible. It is also very chatty, continuously printing details of its progress to the console, so the attackers simply invoke it at the command line using the nohup command, which makes it ignore SIGHUP signals, and redirect its output to /dev/null, allowing them to detach the console and leave it running in the background.

Command-line arguments allow it to target specific folders, but it defaults to starting in the root directory, and if executed with root privileges, it would delete the entire system, with the exception of a few filetypes it will skip, such as .out and .so, which it relies upon for its own execution (the binary is itself named bibi-linux.out).
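
As a defensive aside, the renaming behaviour described above suggests a simple indicator-of-compromise sweep. What follows is only a minimal sketch, not a vetted detection tool: the function name find_suspect_files is hypothetical, and the case-insensitive match on an extension starting with "bibi" is an assumption based solely on the description above.

```python
import tempfile
from pathlib import Path


def find_suspect_files(root, marker="bibi"):
    """Return files under root whose extension starts with marker (case-insensitive)."""
    suspects = []
    for path in Path(root).rglob("*"):
        # ".BiBi1" -> "bibi1"; files without an extension give an empty string.
        extension = path.suffix.lower().lstrip(".")
        if path.is_file() and extension.startswith(marker):
            suspects.append(path)
    return sorted(suspects)


if __name__ == "__main__":
    # Demonstrate on a throwaway directory with made-up file names.
    with tempfile.TemporaryDirectory() as scratch:
        for name in ("q8x2k.BiBi1", "notes.txt", "libfoo.so"):
            (Path(scratch) / name).write_text("placeholder")
        for hit in find_suspect_files(scratch):
            print(hit.name)
```

A sweep like this only finds damage after the fact; it is no substitute for offline backups and for restricting who can run code as root.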

Interestingly, this particular binary is recognized by only a few detectors on VirusTotal, and does not seem to have previously been analyzed.

The use of wipers is not uncommon in nation-state conflicts - NotPetya, for example, was not reversible even though it pretended to be - and Russia has continued to deploy many wipers against Ukrainian targets.

Given the use of "Bibi" in naming, and the targeting of Israeli companies, this malware was likely produced by a Hamas-affiliated hacktivist group. They would not be the only one; Sekoia last week detailed the operations of AridViper (also known as APT C-23, MoleRATs, Gaza Cyber Gang and Desert Falcon), another threat actor believed to be associated with Hamas.

Arid Viper seems to have been active since at least 2012, with first reporting on their activities in 2015 by Trend Micro, and they have been observed delivering data-exfiltration malware for Windows, iOS and Android via malmails to targets in Israel and the Middle East. Since 2020, Arid Viper has been using the PyMICROPSIA trojan and Arid Gopher backdoor, although earlier this month ESET reported the discovery of a new Rust-based backdoor called Rusty Viper, which suggests they are continuing to sharpen their tools.

Sekoia has done a deep dive on Arid Viper's C2 infrastructure as well as the victimology of their targets, who extend across both the Israeli and Arab worlds.

Security Joes, BiBi-Linux: A New Wiper Dropped By Pro-Hamas Hacktivist Group, blog post, 30 October 2023. Available online at https://www.securityjoes.com/post/bibi-linux-a-new-wiper-dropped-by-pro-hamas-hacktivist-group.

Sekoia Threat & Detection Research Team, AridViper, an intrusion set allegedly associated with Hamas, blog post, 26 October 2023. Available online at https://blog.sekoia.io/aridviper-an-intrusion-set-allegedly-associated-with-hamas/.

The Risks of Artificial Intelligence

With AI all over the news in recent weeks, I thought it was time to do a bit of a deep dive on some of the risks posed by artificial intelligence. I'll cover just a few of the stories published over the last few days, before concluding with some deeper thoughts based on recent research.

OpenAI Prepares to Study "Catastrophic Risks"

OpenAI, the company behind the GPT-3 and -4 large language models, ChatGPT, and the Dall-E AI image generator, has started to assemble a team, called "Preparedness", which will

"tightly connect capability assessment, evaluations, and internal red teaming for frontier models, from the models we develop in the near future to those with AGI-level capabilities. The team will help track, evaluate, forecast and protect against catastrophic risks spanning multiple categories including:

- Individualized persuasion

- Cybersecurity

- Chemical, biological, radiological, and nuclear (CBRN) threats

- Autonomous replication and adaptation (ARA)

"The Preparedness team mission also includes developing and maintaining a Risk-Informed Development Policy (RDP). Our RDP will detail our approach to developing rigorous frontier model capability evaluations and monitoring, creating a spectrum of protective actions, and establishing a governance structure for accountability and oversight across that development process. The RDP is meant to complement and extend our existing risk mitigation work, which contributes to the safety and alignment of new, highly capable systems, both before and after deployment."

OpenAI, Frontier risk and preparedness, blog post, 26 October 2023. Available online at https://openai.com/blog/frontier-risk-and-preparedness.

Google Adds AI to Bug Bounty Programs

Google, which has a long history of AI research, has announced that it is adding its AI products to its existing Bug Hunter Program. Based on the company's earlier research and Red Team exercises, it has tightly defined several categories of attacks, such as prompt attacks, training data extraction, model manipulation, adversarial perturbation, model theft or extraction, etc. and has further developed a number of scenarios, some of which will be in scope for the Bug Hunter Program, and some of which will not.

Interestingly, jailbreaks and discovery of hallucinations, etc. will not be within scope, as Google's generative AI products already have a dedicated reporting channel for these content issues.

The firm has already given security research a bit of a boost with the publication of a short report which describes Google's "Secure AI Framework" and provides the categorisation described above, along with links to relevant research.

Vela, Eduardo, Jan Keller and Ryan Rinaldi, Google’s reward criteria for reporting bugs in AI products, blog post, 26 October 2023. Available online at https://security.googleblog.com/2023/10/googles-reward-criteria-for-reporting.html.

Fabian, Daniel, Google's AI Red Team: the ethical hackers making AI safer, blog post, 19 July 2023. Available online at https://blog.google/technology/safety-security/googles-ai-red-team-the-ethical-hackers-making-ai-safer/.

Fabian, Daniel and Jacob Crisp, Why Red Teams Play a Central Role in Helping Organizations Secure AI Systems, technical report, July 2023. Available online at https://services.google.com/fh/files/blogs/google_ai_red_team_digital_final.pdf.

Handful of Tech Firms Engaged in "Race to the Bottom"

So we are gently encouraged to assume, from these and other stories, that the leading AI and related tech firms already have programs to mitigate the risks. Not so fast. One school of thought argues that these companies are taking a proactive approach to self-regulation for two reasons:

- Minimize external regulation by governments, which would be more restrictive than they would like

- Stifle competition by increasing costs of entry

In April, a number of researchers published an open letter calling for a six-month hiatus on experiments with huge models. One of the organizers, MIT physics professor and AI researcher Max Tegmark, is highly critical:

"We’re witnessing a race to the bottom that must be stopped", Tegmark told the Guardian. "We urgently need AI safety standards, so that this transforms into a race to the top. AI promises many incredible benefits, but the reckless and unchecked development of increasingly powerful systems, with no oversight, puts our economy, our society, and our lives at risk. Regulation is critical to safe innovation, so that a handful of AI corporations don’t jeopardise our shared future."

Along with other researchers, Tegmark has called for governments to license AI models and - if necessary - halt their development:

"For exceptionally capable future models, eg models that could circumvent human control, governments must be prepared to license their development, pause development in response to worrying capabilities, mandate access controls, and require information security measures robust to state-level hackers, until adequate protections are ready."

Milmo, Dan and Edward Helmore, Humanity at risk from AI ‘race to the bottom’, says tech expert, The Guardian, 26 October 2023. Available online at https://www.theguardian.com/technology/2023/oct/26/ai-artificial-intelligence-investment-boom.

The Problem is Not AI - It's Energy

For many, the threat of artificial intelligence takes a back seat to the other existential threat of our times: anthropogenic climate change. But what if the two are linked?

The costs of running large models, both in the training phase and for inference once in production, are substantial; in fact, without substantial injections from Microsoft and others, OpenAI's electricity bills would have rendered it insolvent months ago. According to a study by Alex de Vries, a PhD candidate at VU Amsterdam, the current trends of energy consumption by AI are alarming:

"Alphabet’s chairman indicated in February 2023 that interacting with an LLM could “likely cost 10 times more than a standard keyword search.” As a standard Google search reportedly uses 0.3 Wh of electricity, this suggests an electricity consumption of approximately 3 Wh per LLM interaction. This figure aligns with SemiAnalysis’ assessment of ChatGPT’s operating costs in early 2023, which estimated that ChatGPT responds to 195 million requests per day, requiring an estimated average electricity consumption of 564 MWh per day, or, at most, 2.9 Wh per request. ...

"These scenarios highlight the potential impact on Google’s total electricity consumption if every standard Google search became an LLM interaction, based on current models and technology. In 2021, Google’s total electricity consumption was 18.3 TWh, with AI accounting for 10%–15% of this total. The worst-case scenario suggests Google’s AI alone could consume as much electricity as a country such as Ireland (29.3 TWh per year) [my emphasis], which is a significant increase compared to its historical AI-related energy consumption. However, this scenario assumes full-scale AI adoption utilizing current hardware and software, which is unlikely to happen rapidly."

Others, such as Roberto Verdecchia at the University of Florence, think de Vries' predictions may even be conservative, saying, "I would not be surprised if also these predictions will prove to be correct, potentially even sooner than expected".
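
The per-request figures quoted from de Vries above are easy to check for yourself. The following is just a back-of-the-envelope sketch: the constants are the numbers quoted in the passage, while the function and variable names are my own and purely illustrative.

```python
# Figures as quoted from de Vries (2023) in the passage above.
GOOGLE_SEARCH_WH = 0.3            # Wh per standard keyword search
LLM_COST_MULTIPLIER = 10          # "10 times more than a standard keyword search"
CHATGPT_REQUESTS_PER_DAY = 195_000_000
CHATGPT_MWH_PER_DAY = 564


def llm_interaction_wh(search_wh, multiplier):
    """Estimated energy per LLM interaction, scaled up from a keyword search."""
    return search_wh * multiplier


def wh_per_request(mwh_per_day, requests_per_day):
    """Average energy per request, converting MWh to Wh (1 MWh = 1,000,000 Wh)."""
    return mwh_per_day * 1_000_000 / requests_per_day


if __name__ == "__main__":
    print(f"Scaled from search: {llm_interaction_wh(GOOGLE_SEARCH_WH, LLM_COST_MULTIPLIER):.1f} Wh")
    print(f"From ChatGPT load:  {wh_per_request(CHATGPT_MWH_PER_DAY, CHATGPT_REQUESTS_PER_DAY):.2f} Wh")
```

The two estimates come out at roughly 3 Wh and just under 2.9 Wh per interaction, which is the agreement the quoted passage is pointing to.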

A cynic might wonder: why does artificial intelligence consume so much power when the genuine article - the human brain - operates on a power consumption of only 12W? It really is quite remarkable, when you stop to think about it.

de Vries, Alex, The growing energy footprint of artificial intelligence, Joule, 10 October 2023. DOI:https://doi.org/10.1016/j.joule.2023.09.004. Available online at https://www.cell.com/joule/fulltext/S2542-4351(23)00365-3.

The Immediate Risk

Having canvassed just some of the recent news coverage of AI threats and risks, let me turn now to what I consider the biggest immediate risk of the current AI hype cycle.

Current large language models (LLMs) are generative pretrained transformers, but most people do not really understand what a transformer is and does.

A transformer encodes information about word position before it feeds it into a deep learning neural network, allowing the network to learn from the entire input, not just words within a limited distance of each other. Secondly, transformers employ a technique called attention - particularly a derivative called self-attention - which allows the output stages of the transformer to refer back to the relevant word in the input sentence as it produces output.
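
To make those two ideas a little more concrete, here is a toy sketch in plain Python. It is heavily simplified and rests on several assumptions of mine: the word vectors are made-up four-dimensional values, the position encoding is the sinusoidal scheme from the original transformer paper (only one of several in use), and the learned query, key and value projections of a real transformer are omitted, so each word vector serves as all three.

```python
import math


def positional_encoding(position, dim):
    """Sinusoidal position vector: sine on even indices, cosine on odd ones."""
    return [
        math.sin(position / 10000 ** (i / dim)) if i % 2 == 0
        else math.cos(position / 10000 ** ((i - 1) / dim))
        for i in range(dim)
    ]


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with no learned projections."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this word against every word in the input, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # The output is a weighted blend of all the input vectors.
        outputs.append(
            [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        )
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical toy embeddings for a three-word input.
embeddings = {
    "the": [0.1, 0.0, 0.2, 0.1],
    "cat": [0.9, 0.3, 0.1, 0.5],
    "sat": [0.2, 0.8, 0.4, 0.1],
}
sentence = ["the", "cat", "sat"]
inputs = [
    [e + p for e, p in zip(embeddings[word], positional_encoding(pos, 4))]
    for pos, word in enumerate(sentence)
]
outputs, weights = self_attention(inputs)

if __name__ == "__main__":
    for word, row in zip(sentence, weights):
        print(word, [round(w, 3) for w in row])
```

Each printed row shows how much weight one word places on every word in the input. Nothing in the calculation limits it to nearby words, which is the point made above.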

The result - which leads to the key risk - is the impressive performance in conversational tasks, which can seduce non-technical business users into thinking these models are a general artificial intelligence - but this is far from the case. In fact, most LLMs work on the statistical properties of the text they are trained on and do not understand it in any way. In this respect, they are actually very similar to the compression algorithms used to encode text, speech, music and graphics for online transmission (Delétang et al., 2023). In the same way as the decompression algorithm predicts the colour of the next pixel in a graphics image, so an LLM predicts the most likely next word, based upon the statistical properties of the text it has been trained upon. However, this is not always le mot juste.

For example, when Princeton researchers asked OpenAI's GPT-4 LLM to multiply 128 by 9/5 and add 32, it was able to give the correct answer. But when asked to multiply 128 by 7/5 and add 31, it gave the wrong answer. The reason is that the former example is the well-known conversion from Celsius to Fahrenheit, and so its training corpus had included lots of examples, while the second example is probably unique. GPT-4 simply picked a likely number - it did not perform the actual computation (McCoy et al., 2023).
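
For reference, both calculations are trivial when actually computed rather than predicted. This short sketch uses exact fractions to avoid any rounding questions; the function name is my own.

```python
from fractions import Fraction


def scale_and_offset(x, ratio, offset):
    """Multiply x by an exact ratio, add an offset, and return a float."""
    return float(x * ratio + offset)


# The familiar Celsius-to-Fahrenheit form: multiply by 9/5 and add 32.
familiar = scale_and_offset(128, Fraction(9, 5), 32)

# The unfamiliar variant from the same study: multiply by 7/5 and add 31.
unfamiliar = scale_and_offset(128, Fraction(7, 5), 31)

if __name__ == "__main__":
    print(familiar)    # 262.4
    print(unfamiliar)  # 210.2
```

The two problems are equally easy for a program that really does the arithmetic; they differ only in how often the pattern is likely to appear in training text.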

Another example found by the researchers was a simple task of deciphering text encrypted using the Caesar Cipher; GPT-4 easily performed the task when the key was 13, because that value is used for ROT-13 encoding on Usenet newsgroups - but a key value of 12, while returning recognizable English-language text, gave the incorrect text.
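
The Caesar cipher itself is only a few lines of code, and the key makes no difference to its difficulty. The sketch below is my own illustration, with an arbitrary sample message; it is not the researchers' test harness.

```python
import string


def caesar_shift(text, key):
    """Shift each letter forward by key places, wrapping around the alphabet."""
    shifted = []
    for ch in text:
        if ch in string.ascii_lowercase:
            shifted.append(chr((ord(ch) - ord("a") + key) % 26 + ord("a")))
        elif ch in string.ascii_uppercase:
            shifted.append(chr((ord(ch) - ord("A") + key) % 26 + ord("A")))
        else:
            shifted.append(ch)  # leave spaces, digits and punctuation alone
    return "".join(shifted)


def caesar_decrypt(text, key):
    """Decrypting is just shifting backwards by the same key."""
    return caesar_shift(text, -key)


if __name__ == "__main__":
    message = "Stay here"
    for key in (13, 12):
        ciphertext = caesar_shift(message, key)
        print(key, ciphertext, "->", caesar_decrypt(ciphertext, key))
```

A key of 13 is special only in that encryption and decryption are then the same operation, which is why ROT-13 became the convention.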

In short, large language models do not understand the subject matter of the text they process.

Many managers either have never realized this, or forget it in their enthusiasm. And right now, that is the real risk of AI.

Delétang, Grégoire, et al., Language Modeling Is Compression, arXiv preprint, 19 September 2023. Available online at https://arxiv.org/abs/2309.10668.

McCoy, R. Thomas, Shunyu Yao, Dan Friedman, Matthew Hardy and Thomas L. Griffiths, Embers of Autoregression: Understanding Large Language Models Through the Problem They are Trained to Solve, arXiv preprint, 24 September 2023. Available online at https://arxiv.org/abs/2309.13638.

FBI Warns of Chinese and Russian Cyber-Espionage

The FBI, in conjunction with other Five Eyes agencies, has warned of increasing intellectual property theft, including cyber-espionage, by both China and Russia, particularly targeting high tech companies and universities engaged in areas such as space research, AI, quantum computing and synthetic biology. China, in particular, has "long targeted business with a web of techniques all at once: cyber intrusions, human intelligence operations, seemingly innocuous corporate investments and transactions", said FBI Director Christopher Wray.

The FBI, in conjunction with the US Air Force Office of Special Investigations and the National Counterintelligence and Security Center (NCSC), has published a Counterintelligence Warning Memorandum detailing the threats faced by the US space industry, but we can safely assume that other tech sectors face similar difficulties. The report, entitled "Safeguarding the US Space Industry: Keeping Your Intellectual Property in Orbit" lists the variety of impacts caused by espionage in areas such as global competition, national security and economic security, then goes on to detail indicators that an organization is targeted along with suggested mitigation actions. The details of reporting contact points are US-specific, but it is not difficult to find the corresponding agencies in other countries.

FBI, AF OSI and NCSC, Safeguarding the US Space Industry: Keeping Your Intellectual Property in Orbit, counterintelligence warning memorandum, October 2023. Available online at https://www.dni.gov/files/NCSC/documents/SafeguardingOurFuture/FINAL%20FINAL%20Safeguarding%20the%20US%20Space%20Industry%20-%20Digital.pdf.

Middle East Conflict Spills Over Into DDoS Attacks

Earlier this month we wrote about the HTTP/2 Rapid Reset attack, which was used to deliver massive layer 7 distributed denial of service attacks to a number of targets. Cloudflare reported a peak of 201 million requests per second. In its latest report, the network firm reports that it saw an overall increase of 65% in HTTP DDoS attack traffic in Q3 of 2023, by comparison to the previous quarter - due in part to the layer 7 Rapid Reset attacks. Layer 3 and 4 DDoS attacks increased by 14%, with numerous attacks in the terabit/second range, the largest peaking at 2.6 Tbps.

The largest volume of HTTP DDoS traffic was directed at gaming and online gambling sites, which have long been a favourite of DDoS extortion operators. Although the US remains the largest source of DDoS traffic, at 15.8% of the total, China is not far behind with 12.6%, followed by Brazil up from fourth place at 8.7% and Germany, which has slipped from third place, at 7.5%.

In other news that will likely surprise no-one, only 12 minutes after Hamas launched rocket attacks into Israel on 7 October, Cloudflare's systems detected and mitigated DDoS attacks on Israeli websites that provide alerts and critical information to civilians on rocket attacks. The initial attack peaked at 100k RPS and lasted ten minutes, but was followed 45 minutes later by a much larger six-minute attack which peaked at 1M RPS.

In addition, Palestinian hacktivist groups engaged in other attacks, such as exploiting a vulnerability in the "Red Alert: Israel" warning app.

In the days since, DDoS attacks on Israeli web sites have continued, mainly targeting newspaper and media sites, as well as the software industry and financial sector.

However, there are attacks in the other direction; since the beginning of October, Cloudflare has detected and mitigated over 454 million HTTP DDoS attack requests targeting Palestinian web sites. Although this is only one-tenth of the volume of attack requests directed at Israel, it is a larger proportion of the traffic sent to Palestinian web sites; since 9 October nearly 6 out of every 10 HTTP requests to Palestinian sites were DDoS attack traffic.

Yoachmik, Omer and Jorge Pacheco, Cyber attacks in the Israel-Hamas war, blog post, 24 October 2023. Available online at https://blog.cloudflare.com/cyber-attacks-in-the-israel-hamas-war/.

Yoachmik, Omer and Jorge Pacheco, DDoS threat report for 2023 Q3, blog post, 27 October 2023. Available online at https://blog.cloudflare.com/ddos-threat-report-2023-q3/.

New Attack Extracts Credentials from Safari Browser (But Don't Panic!)

Most security pros will doubtless remember the original speculative execution attacks, Spectre and Meltdown, which were first disclosed in early 2018. These attacks exploit the side effects of speculative, or out-of-order, execution, a feature of modern processors.

One design challenge for these CPUs is memory latency - reading the computer's main memory across an external bus is slow by comparison with the internal operation of the processor, and the designs deal with this by making use of one or more layers of on-chip cache memory. Much of the time, programs execute loops, for example, and so the first time through the loop, the relevant code is fetched and retained in cache so that subsequent executions of the loop body fetch the code from the cache and not main memory.

In fact, modern processors also feature a pipelined architecture, pre-fetching and decoding instructions (e.g. pre-fetching required data from memory) ahead of time, and they are also increasingly parallelized, featuring multiple execution units or cores. The Intel i9 CPU of the machine I am typing this on, for example, has 16 cores, and 8 of those each have two independent sets of registers to make context switching even faster (a feature Intel calls hyperthreading) so that it looks like 24 logical processors. All those processors need to be kept busy, and so they may fetch and execute instructions from the pipeline in a different order from the way they were originally fetched and placed in the pipeline, especially if an instruction is waiting for the required data to be fetched.

One aspect of this performance maximization is branch prediction and speculative execution. A branch predictor circuit attempts to guess the likely destination of a branch instruction ahead of time, for example, if the branch logic depends on the value of a memory location that is in the process of being read. Then having predicted this, the CPU will set out executing the code starting at the guessed branch destination. One downside is that sometimes the branch predictor gets it wrong, resulting in a branch misprediction, so this computation is done using yet another set of registers which will be discarded in the event of a misprediction (or committed, i.e. switched for the main register set, if the prediction was correct). This technique is referred to as speculative execution, and it is possible because the microarchitecture that underlies x86_64 complex instruction set computer (CISC) processors is really a RISC (reduced instruction set computer) processor with a massive number of registers.

The result is an impressive performance boost, but it has a number of side-effects. For example, a branch misprediction will cause a delay of between 10 and 20 CPU clock cycles as registers are discarded and the pipeline refilled, so there will be a timing effect. It will also leave traces in the cache - instructions that were fetched but not executed, for example. And if a branch prediction led to, for example, a system call into the OS kernel, then a low-privilege user process may have fetched data from a high-privilege, supervisor mode, address into a CPU register.
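The cache side-effect can be shown with a deliberately simplified simulation. This is not exploit code and involves no real timing: the cache is just a Python set, and the function names and the secret value are hypothetical. It only sketches the principle that discarding the speculative registers does not undo the cache footprint.

```python
def speculative_victim(secret_byte, cache, prediction_correct):
    """Model a speculated load whose address depends on a secret value."""
    shadow_registers = {"value": secret_byte}  # result held in the speculative register set
    cache.add(secret_byte)                     # side-effect: the line indexed by the secret is now cached
    if not prediction_correct:
        shadow_registers.clear()               # misprediction: the register results are thrown away
    return shadow_registers


def attacker_probe(cache):
    """Return the probe lines that would appear 'fast' (cached) to an attacker."""
    return [line for line in range(256) if line in cache]


cache = set()
registers = speculative_victim(secret_byte=0x2A, cache=cache, prediction_correct=False)
recovered = attacker_probe(cache)
print(registers, recovered)  # {} [42]
```

The registers come back empty, as the architecture promises, yet the one cached line still reveals the value 42. A real attack has to tell cached from uncached lines by some indirect measurement rather than by inspecting a set.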

These side-effects were exploited by the Spectre and Meltdown exploits of 2018, which caused some panic at the time; AMD's stock fell dramatically (leading some observers to suspect that the objective of related publicity was stock manipulation) while the disabling of speculative execution led to a dramatic rise in the cost of cloud workloads as more cores were required to carry the load. In due course, the processor manufacturers introduced various hardware mitigations, and we have gradually forgotten they were a problem.

You could think of these attacks as being related to the classic trojan horse problem in multi-level security systems, in which a high security level process passes sensitive data to a low security level process via a covert channel; they are probably most similar to the timing covert channel (in fact, most variants of these attacks make use of various timers in the system, just like the low security level part of the trojan horse). The essential distinction is that in the case of the trojan horse, the high security level process is a willing participant; in this case, it is not. Even secure-by-design, well-written programs will leak information via speculative execution and related attacks.

While the original investigation that led to Spectre and Meltdown was done on the Intel/AMD x86_64 architecture, Apple's chip designers have been doing some amazing work with the M series processors used in recent MacBooks, Mac minis, iMacs and iPads, not to mention the earlier A series which powered the iPhone, earlier iPads and Apple TV. Could the Apple Silicon processors be similarly vulnerable? The M1 and M2 processors, in particular, were produced after these attacks were known, and incorporate some mitigation features, such as 35-bit addressing and value poisoning (e.g. setting bit 49 of 64-bit addresses so they are offset \(2^{49}\) bytes too high).
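The arithmetic behind these two mitigations is easy to check. The sketch below only illustrates the bit manipulation; the function names, the sample address and the idea of applying 35-bit addressing as a simple mask are assumptions made for illustration, not a description of how Safari implements them.

```python
POISON_BIT = 49   # bit number taken from the description above
CAGE_BITS = 35    # width of a 35-bit address


def poison(address):
    """Set bit 49, so a pointer used without un-poisoning lands 2**49 bytes too high."""
    return address | (1 << POISON_BIT)


def unpoison(address):
    """Clear bit 49 again, as correctly behaving code would before dereferencing."""
    return address & ~(1 << POISON_BIT)


def cage(offset):
    """Keep only the low 35 bits, so the result stays within a 2**35-byte (32 GiB) region."""
    return offset & ((1 << CAGE_BITS) - 1)


address = 0x0000_0001_2345_6780          # hypothetical pointer value with bit 49 clear
poisoned = poison(address)
print(hex(poisoned))                     # 0x2000123456780
print(poisoned - address == 2 ** 49)     # True
print(unpoison(poisoned) == address)     # True
print(hex(cage(0xFFFF_FFFF_FFFF)))       # 0x7ffffffff
```

A speculated access that skips the un-poisoning step therefore points far away from the real data, and a caged offset cannot reach beyond its 35-bit window.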

A group of researchers from Georgia Tech, University of Michigan and Ruhr University Bochum - several of whom had been involved in the earlier Spectre and Meltdown research - turned their attention to this problem a couple of years ago and found that, yes - these CPUs were exploitable. In particular, they were able to get round the hardware mitigations as well as the defenses in the Safari browser, such as running different browser tabs in different processes (hence different address spaces).

On the web site they created for their attack, the researchers write:

We present iLeakage, a transient execution side channel targeting the Safari web browser present on Macs, iPads and iPhones. iLeakage shows that the Spectre attack is still relevant and exploitable, even after nearly 6 years of effort to mitigate it since its discovery. We show how an attacker can induce Safari to render an arbitrary webpage, subsequently recovering sensitive information present within it using speculative execution. In particular, we demonstrate how Safari allows a malicious webpage to recover secrets from popular high-value targets, such as Gmail inbox content. Finally, we demonstrate the recovery of passwords, in case these are autofilled by credential managers.

Their technical paper lists the work involved in combining a variety of techniques to create the iLeakage attack:

Summary of Contributions. We contribute the following:

- We study the cache topology, inclusiveness, and speculation window size on Apple CPUs (Section 4.1, 4.2, and 4.5).

- We present a new speculative-execution technique to timerlessly distinguish cache hits from misses (Section 4.3).

- We tackle the problem of constructing eviction sets in the case of low resolution or even non-existent timers, adapting prior approaches to work in this setting (Section 4.4).

- We demonstrate timerless Spectre attack PoCs with near perfect accuracy, across Safari, Firefox and Tor (Section 4.6).

- We mount transient-execution attacks in Safari, showing how we can read from arbitrary 64-bit addresses despite Apple’s address space separation, low-resolution timer, caged objects with 35-bit addressing, and value poisoning countermeasures (Section 5).

- We demonstrate an end-to-end evaluation of our attack, showing how attackers can recover sensitive website content as well as the target’s login credentials (Section 6).

In essence, the iLeakage exploit can be implemented in either JavaScript or WebAssembly, and will allow an attacker's malicious web page, running in a browser tab, to recover the desired data from another page. For this to work, the victim must visit a malicious attack site, and the exploit code needs to monitor the hardware for cache hits vs cache misses, which will take around five minutes. Once it has done this, it can then use the window.open() function to open pages which will share the rendering process with the attacker page, making their memory accessible. Even if the user closes the page, the attack will continue, since the memory is not reclaimed immediately.

The researchers disclosed their technique to Apple on 12 September 2022, upon which Apple requested an embargo on the publication of their work and set about refactoring the Safari multi-process architecture to include mitigation features. As of today, the mitigation feature is present in Safari Technology Preview versions 173 and newer, but is not enabled by default and is hidden in an internal debug menu. Users of macOS Sonoma can enable it fairly easily, but users of earlier versions who have not updated will first need to download and install the appropriate Safari Technology Preview.

In addition to their deeply technical paper, which will be presented at CCS '23 in Copenhagen late next month, the researchers have set up a web site which is much easier to follow, where you can find their FAQ which explains the attack and also presents step-by-step instructions for enabling mitigation in Safari. There are also some videos demonstrating the attack in practice.

There is no need to panic, however; this is an extremely complex and technically challenging attack technique which depends upon a deep understanding of both the Apple Silicon processor architecture and the internals of the Safari browser (it will not work against other browsers, for example, although it could be adapted). A real attack in the wild is vanishingly improbable, and in fact, by the time that a threat actor could come up with one, it is likely that we will all have moved on to new processors with effective mitigations in hardware.

Kim, Jason, Stephan van Schaik, Daniel Genkin and Yuval Yarom, iLeakage: Browser-based Timerless Speculative Execution Attacks on Apple Devices, CCS '23, Copenhagen, Denmark, 26 - 30 November 2023. Available online at https://ileakage.com/files/ileakage.pdf.

Kim, Jason, Stephan van Schaik, Daniel Genkin and Yuval Yarom, iLeakage: Browser-based Timerless Speculative Execution Attacks on Apple Devices, web site, October 2023. Available at https://ileakage.com/.

Upcoming Courses

- SE221 CISSP Fast Track Review, Virtual/Online, 13 - 17 November 2023

- SE221 CISSP Fast Track Review, Sydney, 4 - 8 December 2023

- SE221 CISSP Fast Track Review, Sydney, 11 - 15 March 2024

- SE221 CISSP Fast Track Review, Virtual/Online, 13 - 17 May 2024

- SE221 CISSP Fast Track Review, Virtual/Online, 17 - 21 June 2024

- SE221 CISSP Fast Track Review, Sydney, 22 - 26 July 2024

These news brief blog articles are collected at https://www.lesbell.com.au/blog/index.php?courseid=1. If you would prefer an RSS feed for your reader, the feed can be found at https://www.lesbell.com.au/rss/file.php/1/dd977d83ae51998b0b79799c822ac0a1/blog/user/3/rss.xml.


Copyright to linked articles is held by their individual authors or publishers. Our commentary is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License and is labeled TLP:CLEAR.
