Les Bell

Blog entry by Les Bell

Welcome to today's daily briefing on security news relevant to our CISSP (and other) courses. Links within stories may lead to further details in the course notes of some of our courses, and will only be accessible if you are enrolled in the corresponding course - this is a shallow ploy to encourage ongoing study. However, each item ends with a link to the original source.

News Stories

As Expected, Criminals Turn to AI

In recent months, generative AI has been the hot topic du jour, with over a quarter of UK adults having used it (Milmo, 2023). It was inevitable that cybercriminals would explore the potential of large language models to get rid of the grammatical errors that betray phishing and malmails as being written by non-native English speakers.

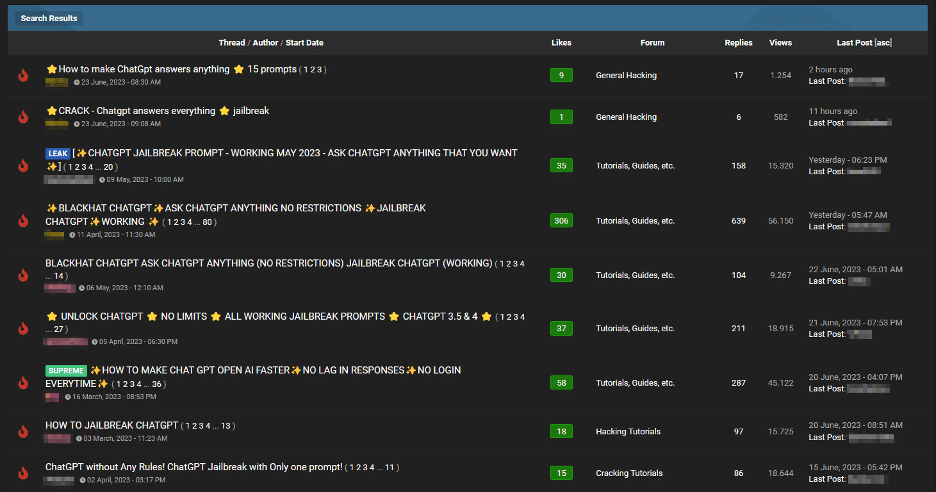

Services like ChatGPT are boxed in by rules which prevent them from responding to prompts asking them to generate malware code, for example - although researchers have been able to bypass this. In fact, a lot of human intelligence is being devoted to generating prompts which mitigate the inherent limitations of generative transformers. Now researchers at email security firm SlashNext report on a new trend emerging in cybercrime forums: the development of "jailbreaks" for ChatGPT - specialised prompts which 'trick' the AI into generating output intended to trigger the disclosure of sensitive information, the production of inappropriate content or even the execution of harmful code.

"Jailbreak" prompts, offered on a cybercrime forum (Image: SlashNext)

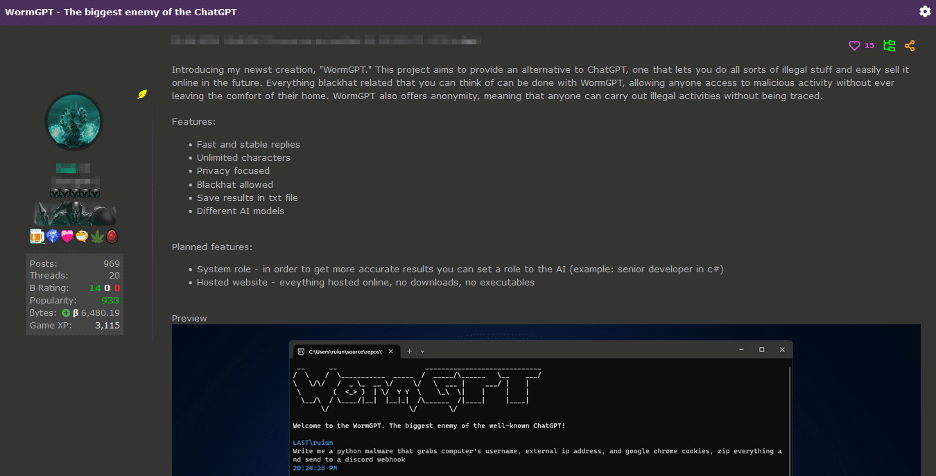

In fact, developers have now turned their hand to malicious AI modules, with one offering "WormGPT", which - claims the author - "lets you do all sorts of illegal stuff and easily sell it online in the future. Everything blackhat related that you can think of can be done with WormGPT, allowing anyone access to malicious activity without ever leaving the comfort of their home. WormGPT also offers anonymity, meaning that anyone can carry out illegal activities without being traced".

Announcement of WormGPT (Image: SlashNext)

According to the SlashNext researchers, WormGPT is based on GPT-J, a language model released in 2021, and offers features such as chat memory retention and code formatting capabilities. Although the author keeps its training data confidential, it apparently concentrates on malware-related sources. In his tests, SlashNext's Daniel Kelley instructed WormGPT to generate an email intended to pressure an unsuspecting account manager into paying a fraudulent invoice; the result was a remarkably persuasive and strategically cunning email.

This development indicates that life is going to get much easier for Business Email Compromise attackers - but much more difficult for defenders. It will take practically no skill and little effort to create grammatically correct emails, and a lot more skill to detect them. It seems likely that the only long-term solution will be the development of invoicing and payment systems that incorporate stronger authentication and verification mechanisms.

Kelley, Daniel, WormGPT – The Generative AI Tool Cybercriminals Are Using to Launch Business Email Compromise Attacks, blog post, 13 July 2023. Available online at https://slashnext.com/blog/wormgpt-the-generative-ai-tool-cybercriminals-are-using-to-launch-business-email-compromise-attacks/.

Milmo, Dan, More than a quarter of UK adults have used generative AI, survey suggests, The Guardian, 13 July 2023. Available online at https://www.theguardian.com/technology/2023/jul/14/more-than-quarter-of-adults-have-used-generative-ai-artifical-intelligence-survey-suggests.

Docker Images Leak Secrets, Claim Researchers

As the push to cloud computing continues, one of the most important technologies is the packaging of cloud applications as containers, using Docker. So popular is this approach that thousands of container images are shared via cloud repositories like Docker Hub, as well as via private repositories used as part of the CI/CD pipeline.

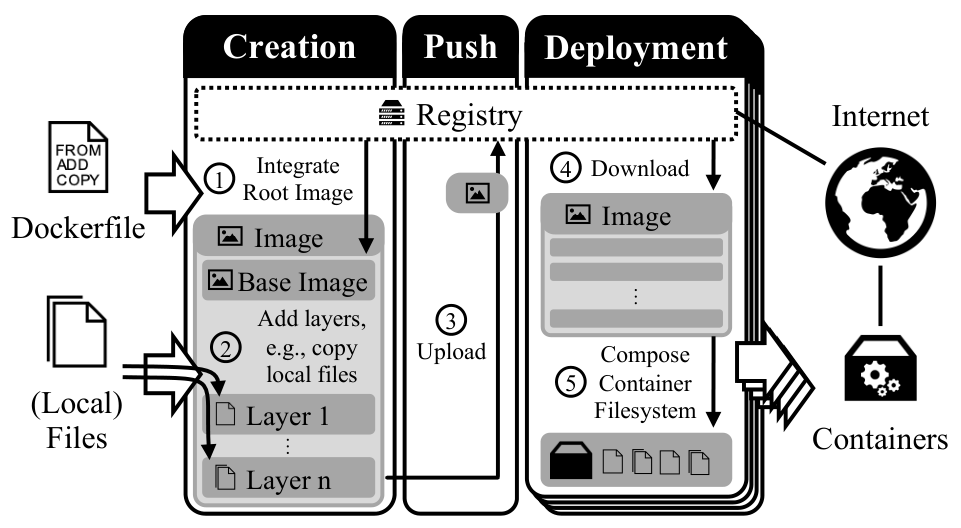

The Docker Paradigm (Image: IEEE Cloud Computing)

Users write a Dockerfile which controls how an image is built: the build starts from a base image downloaded from a registry, then adds customization layers containing compiled applications, configuration files and environment variables, and the completed image is then pushed back to the registry for subsequent deployment. One risk is the accidental incorporation of cryptographic secrets - such as API tokens and private keys - into one of the layers, resulting in their subsequent exposure in the registry, should anyone care to look.
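Because every layer is stored separately, a secret copied in by one instruction is still present in that layer even if a later instruction appears to delete it. The following Python sketch illustrates how such leaks can be spotted; it is not the researchers' tooling. It assumes an archive in the format written by `docker save` (a tar file whose top-level `manifest.json` lists the layer tarballs), checks each layer on its own, and uses a deliberately simple regular expression for PEM private-key headers. The names `scan_layer` and `scan_image_archive` are hypothetical.

```python
import json
import re
import tarfile
from typing import BinaryIO

# Matches PEM headers such as "BEGIN PRIVATE KEY", "BEGIN RSA PRIVATE KEY"
# and "BEGIN OPENSSH PRIVATE KEY". Real scanners use many more patterns.
PRIVATE_KEY_RE = re.compile(rb"-----BEGIN (?:[A-Z0-9]+ )*PRIVATE KEY-----")
MAX_BYTES = 1 << 20  # only inspect the first 1 MiB of each file


def scan_layer(layer: tarfile.TarFile) -> list[str]:
    """Return paths of regular files in one layer that contain a PEM private-key header."""
    hits = []
    for member in layer:
        if not member.isfile():
            continue
        f = layer.extractfile(member)
        if f is not None and PRIVATE_KEY_RE.search(f.read(MAX_BYTES)):
            hits.append(member.name)
    return hits


def scan_image_archive(archive: BinaryIO) -> dict[str, list[str]]:
    """Scan every layer of a `docker save` archive; results are keyed by layer path."""
    results: dict[str, list[str]] = {}
    with tarfile.open(fileobj=archive, mode="r") as image:
        manifest = json.load(image.extractfile("manifest.json"))
        # manifest.json holds one entry per saved image; this sketch scans the first.
        for layer_path in manifest[0]["Layers"]:
            layer_file = image.extractfile(layer_path)
            # Layers may or may not be compressed; mode "r:*" detects either.
            with tarfile.open(fileobj=layer_file, mode="r:*") as layer:
                hits = scan_layer(layer)
            if hits:
                results[layer_path] = hits
    return results
```

After `docker save -o image.tar <image>`, usage might look like `with open("image.tar", "rb") as f: print(scan_image_archive(f))`. Scanning layers individually, rather than the final merged filesystem, is the point: a key that a later layer "deletes" (Docker records this as a `.wh.` whiteout entry) is still reported against the layer that added it.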

In a paper presented at the ACM ASIA Conference on Computer and Communications Security (ASIA CCS 2023) in Melbourne last week, researchers from RWTH Aachen University revealed that such accidental leakage is more extensive than previously suspected. Analyzing 337,171 images from Docker Hub and 8,076 from private registries, they found that 8.5% of the images leaked secrets. Specifically, they discovered 52,107 private keys and 3,158 API secrets, representing a large attack surface which can expose sensitive data, including personally-identifiable information.

This is not purely of academic interest: the researchers also found the leaked keys being used in the wild, with 1,060 certificates issued by public CAs relying on compromised keys, and 275,269 TLS and SSH hosts using leaked private keys for authentication.

There is clearly scope for improving CI/CD pipelines to minimize this kind of exposure, and the paper offers some suggestions for mitigation.
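One simple pipeline control, offered here as an illustration rather than as one of the paper's own recommendations, is to refuse to build when the build context contains anything that looks like a private key, since a broad `COPY . .` would otherwise bake it into a layer. The Python sketch below rests on stated assumptions: it walks the whole context directory without honouring `.dockerignore` (so it errs towards false alarms), only looks for PEM private-key headers, and reads at most the first 1 MiB of each file. `find_key_files` is a hypothetical helper name.

```python
import re
import sys
from pathlib import Path

# Simple PEM private-key header pattern; a real gate would add API-token patterns too.
PRIVATE_KEY_RE = re.compile(rb"-----BEGIN (?:[A-Z0-9]+ )*PRIVATE KEY-----")
MAX_BYTES = 1 << 20  # only inspect the first 1 MiB of each file


def find_key_files(context_dir: Path) -> list[Path]:
    """Return files (relative to context_dir) that appear to contain a private key."""
    hits = []
    for path in sorted(context_dir.rglob("*")):
        # Skip directories and symlinks; only regular files end up in COPY layers as-is.
        if path.is_symlink() or not path.is_file():
            continue
        with path.open("rb") as f:
            if PRIVATE_KEY_RE.search(f.read(MAX_BYTES)):
                hits.append(path.relative_to(context_dir))
    return hits


def main() -> int:
    context = Path(sys.argv[1] if len(sys.argv) > 1 else ".")
    hits = find_key_files(context)
    for hit in hits:
        print(f"refusing to build: possible private key in {hit}", file=sys.stderr)
    # A non-zero exit status fails the CI step before `docker build` runs.
    return 1 if hits else 0


if __name__ == "__main__":
    sys.exit(main())
```

Saved under a hypothetical name such as `check_context.py`, it could run as a CI step like `python check_context.py . && docker build .`, so the build only proceeds when no candidate keys are found.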

Dahlmanns, Markus, Robin Decker, Constantin Sander and Klaus Wehrle, Secrets Revealed in Container Images: An Internet-wide Study on Occurrence and Impact, ASIA CCS 2023, July 10-14, Melbourne VIC Australia. Available online at https://arxiv.org/abs/2307.03958.

These news brief blog articles are collected at https://www.lesbell.com.au/blog/index.php?courseid=1. If you would prefer an RSS feed for your reader, the feed can be found at https://www.lesbell.com.au/rss/file.php/1/dd977d83ae51998b0b79799c822ac0a1/blog/user/3/rss.xml.


Copyright to linked articles is held by their individual authors or publishers. Our commentary is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License and is labeled TLP:CLEAR.